In a few days, my new OLED display should arrive in the post. In the meantime I’ve been brushing up on my understanding of SPI to control it. Rather than test via an embedded device, I’d like to confirm it’s working OK by driving it directly from my PC. I bought an FT232H board a while ago, so this is a good opportunity to try it out.

Author: admin

I have a few ideas I’d like to prototype for live performance using MIDI loopers rather than audio loopers. I have sketched early ideas but I’d like to experiment with the project in earnest. I’m tinkering with tools before mocking up some early designs.

    // Call the passed function object N times. This 'unrolls' the loop
    // explicitly in assembly code, rather than creating a runtime loop.
    template <unsigned int N, typename Fn>
    void unroll(Fn&& fn) {
        if constexpr (N != 0) {
            unroll<N - 1>(fn);
            fn(N - 1);
        }
    }

    // extern function ensures the loop is not optimised away
    extern void somethin(unsigned int i);

    // Call somethin 4 times, passing the count each time
    void four_somethins() {
        unroll<4>([](unsigned int i) {
            somethin(i);
        });
    }

Rolling out Factors

Today’s post is an algorithm for ‘rolling’ out factors from any number. It determines all of the factors of that number, and hence whether it is prime.

This has grown from various doodles on paper, forming the idea that all natural numbers have a shape, a hyper-volume of N dimensions, where N is the total number of factors for that number.
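The 'rolling' algorithm itself isn't shown in this excerpt, so the following is only a baseline for comparison: plain trial division, not the method from the post. It assumes that 'total number of factors' means prime factors counted with repeats, so 12 = 2 × 2 × 3 would be a 3-dimensional shape. The names `prime_factors` and `is_prime` are made up for this sketch.

```cpp
#include <cstdint>
#include <vector>

// Baseline only (an assumption, not the post's 'rolling' method):
// plain trial division. Returns the prime factors of n in ascending
// order, with repeats. Returns an empty list for n < 2.
std::vector<std::uint64_t> prime_factors(std::uint64_t n) {
    std::vector<std::uint64_t> factors;
    if (n < 2) return factors;
    // Only divisors up to sqrt(n) need testing; d <= n / d avoids overflow.
    for (std::uint64_t d = 2; d <= n / d; ++d) {
        while (n % d == 0) {
            factors.push_back(d);
            n /= d;
        }
    }
    if (n > 1) factors.push_back(n);  // whatever is left is itself prime
    return factors;
}

// A number is prime exactly when it is its own only prime factor.
bool is_prime(std::uint64_t n) {
    return prime_factors(n).size() == 1;
}
```

Under that reading, the length of the returned list is the number of dimensions of the number's 'shape'.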

Last Monday of 2019

I’ve been exploring two things today: a sort of 2D-to-3D tunnel concept I’d like to render, and more experiments photographing bits of trees for my ‘overlaying stuff’ projects (working title…).

I’ve uploaded recent AARC jams between Ryan and me onto Bandcamp. The first of these is a practice for a live gig at the Modular Meetup in London and the second is the gig itself:

Now to the meat of the problem: how the tasks actually run. Some of these tasks are going to run in parallel, and that could cause threading issues. Looking at the original challenge, there is an obvious sticking point. The two tasks ‘Preheat heater’ and ‘Mix reagent’ need to notify ‘Heat Sample’ that they are done in a thread-safe way, so I will need a mutex. Alternatively I could use a thread-safe signal such as boost::signals2::signal to inform the node that a task has finished, but that’s a bit heavyweight.
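As a rough illustration of the mutex option, here is a minimal sketch of a join point that counts down as prerequisite tasks report in. `JoinNode`, `notify_done` and `run_heat_sample_example` are hypothetical names for this example, not code from the challenge repo.

```cpp
#include <cstddef>
#include <functional>
#include <mutex>
#include <thread>
#include <utility>

// Hypothetical join point: runs `on_ready` once every prerequisite
// task has reported that it is finished.
class JoinNode {
public:
    JoinNode(std::size_t prerequisites, std::function<void()> on_ready)
        : remaining_(prerequisites), on_ready_(std::move(on_ready)) {}

    // Called by each prerequisite task, from any thread, when it finishes.
    void notify_done() {
        bool ready = false;
        {
            std::lock_guard<std::mutex> lock(mutex_);
            if (remaining_ > 0 && --remaining_ == 0) ready = true;
        }
        if (ready) on_ready_();  // run the task outside the lock
    }

private:
    std::mutex mutex_;
    std::size_t remaining_;
    std::function<void()> on_ready_;
};

// 'Preheat heater' and 'Mix reagent' run in parallel and both notify
// 'Heat Sample'. Returns how many times 'Heat Sample' ran.
int run_heat_sample_example() {
    int heat_sample_runs = 0;
    JoinNode heat_sample(2, [&] { ++heat_sample_runs; });
    std::thread preheat([&] { heat_sample.notify_done(); });
    std::thread mix([&] { heat_sample.notify_done(); });
    preheat.join();
    mix.join();
    return heat_sample_runs;
}
```

Only the thread that brings the count to zero runs the callback, so ‘Heat Sample’ starts exactly once regardless of which prerequisite finishes last. A `std::atomic` counter would also work for this simple case.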

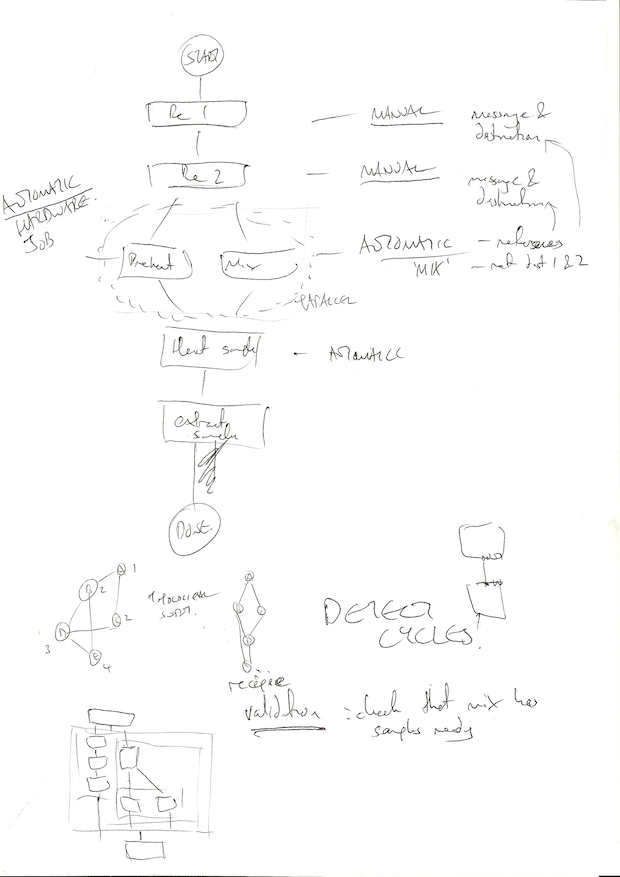

These are some embarrassingly loose sketches made en route to a friend’s leaving do. I wanted to cement my ideas about the builder class and these led into the idea of how one defines a hardware device. I was getting painfully close to template magic with these ideas but wanted to get an idea for the most expressive DSL for a recipe.

Nanopore Technical Challenge Day 1 … part 2

I sketched out a few early ideas in code to get a feel for good approaches in software. As the DAG is being generated by a builder, I wanted to see if it was possible to use std::array for inputs rather than std::vector. Regardless, my first doodle was just a simple DAG structure exploring the concept of adding hardware dependencies: https://godbolt.org/g/5y3Gsa

This was very useful in identifying a minimal DAG node, called a Task in this example. A few points:

- Technically I don’t need to store the inputs as I’ll be processing this DAG top to bottom.

- Serial and parallel operations can be simple derivatives of Task.

- All operations on the device would be task derivatives.

- Avoid cycles during insertion (possible to static assert if DAG size is known at construction?)

The main() block makes a conceptual jump into a nice syntax for a builder class that builds up the DAG in a readable way, sort of like a DSL for lab protocols. The base TaskBuilder class is responsible for generating a single DAG node. The TaskBuilderContainer is responsible for a list of DAG nodes, and is derived from Task with an extra method end() to indicate the end of a scope block.

These builder classes are nicely wrapped up in readable class methods like add_ingredient() or preheat(). recipe() should really be called workflow() and takes a name.
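To give a flavour of that syntax, here is a much-simplified sketch, not the code behind the godbolt link. It folds everything into one hypothetical `RecipeBuilder` class rather than the TaskBuilder and TaskBuilderContainer pair. The method signatures, the `parallel()`, `mix()` and `heat_sample()` methods, and the string-based `Task` are all assumptions made for illustration.

```cpp
#include <cstddef>
#include <string>
#include <utility>
#include <vector>

// Simplified stand-in for a DAG node: a label plus the indices of the
// tasks it depends on.
struct Task {
    std::string name;
    std::vector<std::size_t> inputs;
};

// Hypothetical fluent builder. Tasks added in sequence depend on the
// previous step; tasks added between parallel() and end() all depend on
// the step before the scope, and the step after end() depends on them all.
class RecipeBuilder {
public:
    explicit RecipeBuilder(std::string name) : name_(std::move(name)) {}

    RecipeBuilder& add_ingredient(const std::string& port) { return append("add ingredient at " + port); }
    RecipeBuilder& preheat(int celsius) { return append("preheat to " + std::to_string(celsius)); }
    RecipeBuilder& mix(const std::string& a, const std::string& b) { return append("mix " + a + " and " + b); }
    RecipeBuilder& heat_sample(int seconds) { return append("heat for " + std::to_string(seconds) + "s"); }

    RecipeBuilder& parallel() {
        in_parallel_ = true;
        branch_ends_.clear();
        return *this;
    }

    // Marks the end of a scope block.
    RecipeBuilder& end() {
        if (in_parallel_ && !branch_ends_.empty()) frontier_ = branch_ends_;
        in_parallel_ = false;
        return *this;
    }

    const std::vector<Task>& tasks() const { return tasks_; }

private:
    RecipeBuilder& append(std::string name) {
        tasks_.push_back({std::move(name), frontier_});
        const std::size_t id = tasks_.size() - 1;
        if (in_parallel_) branch_ends_.push_back(id);
        else frontier_ = {id};
        return *this;
    }

    std::string name_;
    std::vector<Task> tasks_;
    std::vector<std::size_t> frontier_;     // what the next task depends on
    std::vector<std::size_t> branch_ends_;  // tasks added in the open parallel scope
    bool in_parallel_ = false;
};

// Usage: reads roughly like a lab protocol.
std::vector<Task> build_example() {
    RecipeBuilder recipe("example");
    recipe.add_ingredient("input_a")
        .parallel()
            .preheat(65)
            .mix("cell_1_1", "cell_1_2")
        .end()
        .heat_sample(2);
    return recipe.tasks();
}
```

Because tasks are appended in dependency order, the resulting list is already sorted topologically.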

A few days ago Nanopore sent me a technical challenge as part of their interview process. I’ve set up a GitHub repo to track development progress. These are some notes before I go headlong into coding:

Day 1 – tech challenge received

The challenge involves building a DAG for a workflow. The first thing I did on receiving it was sketch out their example DAG to get a better feel for the behaviour of each node:

Forgive the quality of these scans; I’m cobbling this together fast! The first sketch highlighted the scope of the design for the DAG:

- Whether a task is manual or automatic.

- Functional behaviour (e.g. display message, move sample).

- Specification of ‘ports’ on the device:

  - Input where the user adds a sample. I’ll call these input_a, input_b etc.

  - Output where the user removes a sample. I’ll call these output_a, output_b etc.

  - General input/output ‘cells’, which are where the device is moving samples around, which I’m assuming to be a 2×2 grid from seeing the VolTRAX videos. I’ll call these cell_1_1, cell_2_4 etc.

  - Certain cells do work, e.g. heating, applying magnetic field. I’ll treat these like regular cells but give them special IDs like heater_1.

Example tasks and data required:

- Add ingredient (manual): show message; input port ID; destination cell to move sample to.

- Mix ingredients (auto): two registers to mix; cell where the sample ends up.

- Preheat (auto): heater temperature; heater ID if there is more than one.

- Heat sample (auto): input cell location; time on heater; heater ID if there is more than one; output cell location.

- Extract sample (manual): show message; output port ID.

This highlights some missing data in the DAG: the operations of moving between certain cells should perhaps be nodes in themselves. For example, the ‘heat sample’ task would be simpler if it were split up using move tasks:

- Move sample from cell_1_2 to heater_1.

- Stay on (preheated) heater_1 for 2 seconds.

- Move sample from heater_1 to cell_2_3.
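The split above could be sketched like this. The `Move`, `Hold`, `HeatSample` and `expand` names are hypothetical, and cell IDs are kept as plain strings purely for brevity.

```cpp
#include <string>
#include <variant>
#include <vector>

// Hypothetical primitive steps.
struct Move { std::string from; std::string to; };
struct Hold { std::string cell; int seconds; };
using Step = std::variant<Move, Hold>;

// The compound 'heat sample' task, carrying the data listed earlier.
struct HeatSample {
    std::string input_cell;
    std::string heater_id;
    int seconds;
    std::string output_cell;
};

// Expand the compound task into move / hold / move.
std::vector<Step> expand(const HeatSample& task) {
    return {
        Move{task.input_cell, task.heater_id},
        Hold{task.heater_id, task.seconds},
        Move{task.heater_id, task.output_cell},
    };
}
```

Calling `expand(HeatSample{"cell_1_2", "heater_1", 2, "cell_2_3"})` yields the three steps in the list above.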

A few other things came up in this sketch:

- Being a DAG it needs to avoid cycles.

- The ports/cells give me a conceptual device to work with.

- Validation. If I know all the inputs and outputs then I can run a validation step to ensure that anything that uses a particular cell or input has had that cell or input set up; i.e. check hardware dependencies.

- To run the program the nodes will need to be sorted topologically. This is not necessary if nodes are inserted in order.

- My sketch at the bottom left is my thinking about organising parallel data tasks, which led to the builder concepts detailed in the next post. I think I can get a nice DSL together.
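The cycle and sorting points can be covered together. Below is a minimal sketch using Kahn's algorithm, which is one standard choice and not necessarily what the final code uses. It assumes each node is identified by its index and lists the indices of the nodes it depends on; `topological_order` is a name made up for this example.

```cpp
#include <cstddef>
#include <optional>
#include <queue>
#include <vector>

// inputs[i] lists the nodes that node i depends on (each index must be
// less than inputs.size()). Returns a valid run order, or std::nullopt
// if the graph contains a cycle and so is not a DAG.
std::optional<std::vector<std::size_t>>
topological_order(const std::vector<std::vector<std::size_t>>& inputs) {
    const std::size_t n = inputs.size();
    std::vector<std::size_t> pending(n);  // unmet dependencies per node
    std::vector<std::vector<std::size_t>> dependents(n);
    for (std::size_t node = 0; node < n; ++node) {
        pending[node] = inputs[node].size();
        for (std::size_t dep : inputs[node]) dependents[dep].push_back(node);
    }

    std::queue<std::size_t> ready;  // nodes with every dependency met
    for (std::size_t node = 0; node < n; ++node) {
        if (pending[node] == 0) ready.push(node);
    }

    std::vector<std::size_t> order;
    while (!ready.empty()) {
        const std::size_t node = ready.front();
        ready.pop();
        order.push_back(node);
        for (std::size_t next : dependents[node]) {
            if (--pending[next] == 0) ready.push(next);
        }
    }

    // Any node left unscheduled must sit on, or downstream of, a cycle.
    if (order.size() != n) return std::nullopt;
    return order;
}
```

Nodes that become ready at the same time, such as two tasks with no dependencies, are exactly the ones that could run in parallel.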